With the advancements in artificial intelligence, machine learning, and deep learning, the demand for high-performance computing is escalating. And where there is demand, suppliers don't take long to come up with a solution.

NVIDIA answered that demand with one of its latest releases, the H200.

It is unlike other GPUs, offering a level of power we have never seen before. But there is a catch: the power this GPU needs to run is not something individuals or scientists can handle in a small or non-dedicated workspace.

Technically, running such a monster requires compatible servers, robust cooling systems, high-efficiency power supplies, software and licensing, and much more. Accommodating all of that costs thousands of dollars, and once you factor in electricity, it doesn't look like a very lucrative option.
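To see why electricity matters, here is a rough sketch of the yearly energy bill for a single H200 running at its 700W TDP. The electricity price and utilization below are hypothetical placeholders, not quoted rates, and the estimate ignores cooling overhead and the rest of the server.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year


def annual_energy_cost(power_watts: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Estimate yearly electricity cost for a device drawing `power_watts`.

    `utilization` is the assumed fraction of the year spent at that draw;
    real workloads fluctuate, so treat this as a rough planning number.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    kwh = power_watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh * price_per_kwh


# Hypothetical inputs: one H200 at its 700W TDP, $0.15/kWh, busy 80% of the year.
cost = annual_energy_cost(700, price_per_kwh=0.15, utilization=0.8)
print(f"Estimated annual GPU energy cost: ${cost:,.2f}")
```

Multiply by the number of GPUs, then add CPUs, networking, and cooling, and the bill for a multi-GPU server grows quickly.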

We have a solution for you!

With our affordable, high-performance GPU colocation service, you can leverage the power of the most advanced GPUs (like the H200) without worrying about management costs. More on that later.

H200 Power Consumption: Can You Handle It?

Handling the power configuration for AI computing requires robust infrastructure to support the intense demands of GPUs like the H200, and this is certainly not everyone's cup of tea. Widely known as one of the most powerful GPUs, the H200 is optimized for high-performance computing and AI workloads.

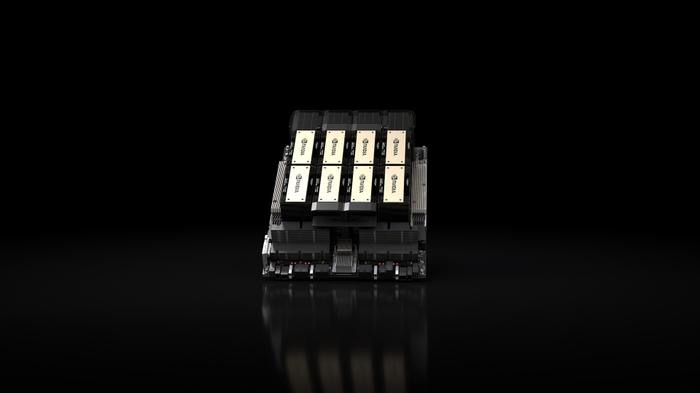

Used in NVIDIA's HGX platform, this high-performance GPU is equipped with numerous Tensor Cores that let it perform highly complex, compute-intensive tasks rapidly through parallel processing.

The power-hungry H200 is the first GPU with HBM3E memory—HBM3E is an enhanced version of HBM3, designed to offer improved performance, increased bandwidth, and better efficiency for applications that require high data throughput, such as artificial intelligence (AI), machine learning, and high-performance computing (HPC).

In numbers, the H200 has a Thermal Design Power (TDP) of up to 700W. Note that this heat output and power draw are rated for normal operating conditions; actual draw varies with the workload and environment, and brief spikes above the rated figure are possible, so facilities should plan for headroom.

This underlines the importance of data centers and AI colocation, which provide the infrastructure these machines need, along with advanced cooling that keeps them at optimal operating temperatures.
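Every watt a GPU draws ends up as heat the cooling system must remove. The sketch below converts electrical load into heat load (1 W is about 3.412 BTU/hr) and estimates total facility power using Power Usage Effectiveness (PUE), the ratio of total facility power to IT equipment power. The PUE of 1.5 in the example is an assumed value for illustration, not a measurement of any particular data center.

```python
BTU_PER_HOUR_PER_WATT = 3.412142  # 1 W of electrical load is ~3.412 BTU/hr of heat


def heat_load_btu_per_hour(it_load_watts: float) -> float:
    """Heat the cooling system must remove, assuming all IT power becomes heat."""
    return it_load_watts * BTU_PER_HOUR_PER_WATT


def facility_power_watts(it_load_watts: float, pue: float) -> float:
    """Total facility draw implied by a PUE (total power / IT power)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_watts * pue


gpu_tdp_w = 700  # H200 TDP cited in the text
print(f"Heat load: {heat_load_btu_per_hour(gpu_tdp_w):,.0f} BTU/hr")
# Assumed PUE of 1.5, for illustration only.
print(f"Facility power: {facility_power_watts(gpu_tdp_w, pue=1.5):,.0f} W")
```

A lower PUE means less power spent on cooling and other overhead per watt of GPU work, which is where purpose-built data centers have an edge over a server room.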

NVIDIA's HGX platform combines multiple H200 Tensor Core GPUs with high-speed NVLink interconnects, which boosts overall performance.

Naturally, it also raises power consumption. The total GPU power for an HGX H200 4-GPU board is 2.8kW (4 × 700W), and for an HGX H200 8-GPU board it can reach 5.6kW (8 × 700W), before counting CPUs, networking, and cooling.
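The HGX figures are simply the per-GPU TDP multiplied by the GPU count. This sketch reproduces that arithmetic; it covers the GPUs only, so a real server also needs an allowance for CPUs, memory, networking, and fans. The 2 kW overhead used in the example is a hypothetical placeholder, not an NVIDIA specification.

```python
H200_TDP_WATTS = 700  # maximum TDP per H200, as cited above


def hgx_gpu_power_kw(gpu_count: int, tdp_watts: float = H200_TDP_WATTS) -> float:
    """Aggregate GPU TDP in kilowatts for a board with `gpu_count` GPUs."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return gpu_count * tdp_watts / 1000


def server_power_kw(gpu_count: int, non_gpu_overhead_kw: float) -> float:
    """GPU power plus an assumed allowance for CPUs, networking, fans, etc."""
    return hgx_gpu_power_kw(gpu_count) + non_gpu_overhead_kw


for n in (4, 8):
    print(f"HGX H200 {n}-GPU: {hgx_gpu_power_kw(n):.1f} kW of GPU TDP")

# Hypothetical 2 kW allowance for the rest of an 8-GPU server.
print(f"8-GPU server estimate: {server_power_kw(8, non_gpu_overhead_kw=2.0):.1f} kW")
```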

This powerful GPU is not meant for casual, day-to-day tasks; given its power consumption, using it for simple calculations would be very costly. Below are the complete specifications of the NVIDIA H200.

NVIDIA H200 Complete Specifications

The NVIDIA H200 is based on the Hopper architecture and uses Tensor Core technology designed for HPC and AI tasks such as training large language models, deep learning, and inference. The more you read about its specs, the more you will see why it can be considered one of the best GPUs for AI.

The H200 includes both CUDA cores and Tensor Cores, which deliver very high TFLOPS throughput and exceptional parallel processing, letting it handle highly complex tasks by spreading the work across all its cores at once.

For advanced deep learning tasks, the H200 supports FP8, FP16, INT8, and other precision formats. It also relies on high-bandwidth interconnects to sustain its impressive data throughput, enabling the rapid data transfer rates that high-performance applications require.
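Lower-precision formats matter because they shrink the memory each model parameter needs. Here is a rough sketch of weight memory by format; the 70-billion-parameter model is a hypothetical example, and the estimate counts weights only (activations, KV cache, and optimizer state need additional memory).

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1, "int8": 1}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory (decimal GB) needed just to hold model weights."""
    try:
        bytes_per_param = BYTES_PER_PARAM[precision.lower()]
    except KeyError:
        raise ValueError(f"unknown precision: {precision}") from None
    return num_params * bytes_per_param / 1e9


H200_MEMORY_GB = 141  # HBM3E capacity cited in the text

params = 70e9  # hypothetical 70B-parameter model
for fmt in ("fp16", "fp8"):
    need = weight_memory_gb(params, fmt)
    fits = "fits" if need <= H200_MEMORY_GB else "does not fit"
    print(f"{fmt}: {need:.0f} GB of weights, {fits} in {H200_MEMORY_GB} GB")
```

Halving the bytes per parameter (FP16 to FP8) halves the weight footprint, leaving far more room on a single GPU for the rest of the workload.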

The H200 has 141 GB of HBM3E memory (exact figures can vary by configuration), with memory bandwidth of up to 4.8 TB/s.
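To put 4.8 TB/s in perspective, this sketch computes the theoretical minimum time to read the full 141 GB of memory once. It uses decimal units and assumes peak bandwidth, which real workloads rarely sustain, so treat the result as a lower bound.

```python
def sweep_time_ms(data_gb: float, bandwidth_tb_per_s: float) -> float:
    """Lower-bound time (ms) to read `data_gb` at a peak bandwidth in TB/s."""
    if bandwidth_tb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    seconds = data_gb / (bandwidth_tb_per_s * 1000)  # 1 TB = 1000 GB
    return seconds * 1000


t = sweep_time_ms(141, 4.8)
print(f"Reading 141 GB at 4.8 TB/s takes at least {t:.1f} ms")
```

Because large-model inference often has to stream most of its weights from memory for every generated token, this per-pass time is one reason memory bandwidth, not just compute, shapes real-world speed.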

We have already covered the astounding thermal design power (TDP) of up to 700W, the role of data center GPUs, and how they can help you get the best out of these machines without breaking the bank.

The machine also uses a PCIe Gen 5 interface for faster data transfer rates between the GPU and the motherboard. Moreover, these GPUs are explicitly optimized for artificial intelligence, which can be super helpful in training complex models.

Finally, this GPU is designed specifically for data centers and enterprise use. The cost of infrastructure and management is usually beyond what an individual can afford, so you can turn to options like our Houston data center and use the machines that fit your business needs.

No business should have to choose between cost-effectiveness and functionality. With us, you can power your business with machines as powerful as the H200 at very affordable prices.

That's a lot of information, so let's condense it into a clean, concise table.

| Feature | Description |
|---|---|
| Architecture | Hopper |
| Cores | CUDA cores and Tensor Cores |
| Supported Precision Formats | FP8, FP16, INT8, and others |
| Memory | 141 GB HBM3E |
| Memory Bandwidth | Up to 4.8 TB/s |
| Thermal Design Power (TDP) | Up to 700W |
| Specialties | AI and machine learning training and inference, high-performance computing (HPC) applications, large-scale data analytics, scientific simulations and modeling, etc. |

Why H200 is a Game-Changer in High-Performance Computing

When comparing GPUs and CPUs for AI tasks, GPUs like the H200 deliver massive parallel processing power, which makes them ideal for machine learning and deep learning.

With thousands of GPUs out there, what makes this one so special? In this section, we look at why this particular model is a game changer for high-performance computing (HPC) and other AI-specific tasks.

Training deep learning models or large language models requires processing enormous amounts of data at high speed. The faster data moves through the GPU, the faster models train, which leaves more room for iteration and, eventually, better results.

Before this GPU arrived, memory bandwidth constraints held back workloads that needed more throughput than existing hardware could deliver. Training such models or running such intensive tasks was either too expensive or simply not feasible.

The H200 has opened the door to a new class of GPUs that can take on projects with far greater compute demands. However, this also sparks a debate about the GPU's enormous energy consumption.

Fortunately, you can manage power with a few adjustments. Here is how.

Effective Techniques For Power Management

Considering the immense power of the machine, here’s how you can effectively optimize it to use less power:

- Adjusting Performance Modes: Many GPUs let users choose between lower power consumption and higher performance. If a task does not need peak performance, you can trade a bit of speed for better energy savings.

- Efficient Cooling: We cannot overemphasize how critical cooling is to a GPU's performance. Effective cooling keeps the GPU (or CPU) within its optimal temperature range so it can sustain performance instead of throttling.

- Task Scheduling: Task scheduling means running less intensive tasks during peak hours and heavier jobs off-peak, or spreading work out, to balance energy usage.

- Software Management: NVIDIA provides power management tools, such as nvidia-smi, that let you adjust power levels based on the workload. Also check your software's settings; many applications offer manual options you can tune to reduce the machine's total GPU power consumption.
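For the performance-mode and software-management tips above, one common lever is the GPU power limit, which nvidia-smi can set with its `-pl` (`--power-limit`) option when run with administrator privileges. The sketch below only builds the command and clamps the requested value to a range you supply; the 500W target and the 200 to 700W bounds are hypothetical, so check your card's real limits with `nvidia-smi -q -d POWER` before applying anything.

```python
import subprocess


def power_limit_command(gpu_index: int, target_watts: int,
                        min_watts: int, max_watts: int) -> list[str]:
    """Build an nvidia-smi command that caps one GPU's power draw.

    The target is clamped to [min_watts, max_watts]; those bounds should come
    from the card itself (e.g. `nvidia-smi -q -d POWER`), not from guesses.
    """
    if min_watts > max_watts:
        raise ValueError("min_watts must not exceed max_watts")
    clamped = max(min_watts, min(target_watts, max_watts))
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(clamped)]


def apply_power_limit(cmd: list[str]) -> None:
    """Run the command; requires an NVIDIA driver and admin rights."""
    subprocess.run(cmd, check=True)


# Hypothetical values: cap GPU 0 at 500W within an assumed 200-700W range.
cmd = power_limit_command(0, 500, min_watts=200, max_watts=700)
print(" ".join(cmd))
# apply_power_limit(cmd)  # uncomment only on a machine with NVIDIA GPUs
```

Capping power trades some peak throughput for lower energy use and heat, the same trade-off the performance-mode tip describes.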

Key Takeaways

The NVIDIA H200 is a true powerhouse built for intense AI and high-performance computing. But this level of performance comes with significant energy demands. With a Thermal Design Power of up to 700W, and up to 5.6kW for an 8-GPU configuration, managing this GPU requires careful planning, especially considering its power consumption and cooling system needs.

This GPU is optimized for complex tasks. It handles large AI models, deep learning, and advanced HPC applications with ease. Its high memory bandwidth and Tensor Core architecture deliver high TFLOPS throughput, making it ideal for high-performance computing environments. However, its high power draw and heat output make a robust cooling system and dedicated power supply essential.

Running the H200 on your own can be expensive and challenging. This is where TRG’s data centers come in, providing affordable GPU colocation. You get access to the H200 without the heavy costs of setup and management.

Ready to harness the power of the H200 for your business? Get in touch with us and discover how our data centers can make it possible without breaking the bank.

How TRG Data Centers Can Help

This level of power needs proper infrastructure. Put it in your room and you will feel like you have just landed on the sun. That's why it's wise to take every measure that helps keep this beast at its optimal operating temperature.

Creating such an environment is very costly for an individual. Buying the GPU alone can cost more than $30K, and robust cooling equipment can cost nearly as much as the machine itself.

TRG Data Centers to the rescue!

With our Houston data center and 24/7 remote support, you can run the heaviest machines at a fraction of the cost of owning them. Our years of experience have helped us build infrastructure for the most power-hungry hardware, and you can tap into these machines remotely from your own PCs, much like using cloud GPUs.

Frequently Asked Questions

How much power does H200 use?

The NVIDIA H200 Tensor Core GPU has a maximum TDP of 700W, and it generates a correspondingly large amount of heat under load. H200 systems often combine multiple GPUs to create unprecedented computational power.

So the total power requirement of an H200 system depends on the number of GPUs. For instance, an HGX H200 4-GPU board draws about 2.8kW, while an HGX H200 8-GPU board can reach 5.6kW.

How much faster is H200 than H100?

The H200 is considerably faster than the H100. According to NVIDIA, it delivers up to 45% more performance on certain generative AI workloads and other demanding tasks.

Which GPU is strongest?

NVIDIA's HGX H200 is widely regarded as one of the most powerful GPU platforms in the world. It combines multiple H200 GPUs to tackle workloads that would be too demanding for a single GPU, allowing it to take on even the most complex tasks quickly.
