Imagine devoting more than half of an average American household's electricity to a single GPU. That's roughly what NVIDIA's H100 uses when it runs flat out at its maximum of 700 watts per unit. But don't let the high wattage fool you: this beast of a machine can handle some of the most complex AI and high-performance computing tasks ever imagined, making it worth every watt.

However, with its significant power and cooling requirements, the H100 isn’t designed for personal workspaces. Running a GPU with this kind of power demand calls for an advanced infrastructure—one that can handle substantial energy loads, cooling, and resource allocation.

That's why organizations that want to use machines this robust turn to GPU colocation and high-density colocation, which is exactly what we excel at.

In this article, we’ll break down the H100’s impressive power demands, why they matter, and how TRG’s data center solutions make it easy for you to tap into its full capabilities without overwhelming your budget.

After all, the role and purpose of data center GPUs go far beyond personal computing; these powerful units are designed to accelerate complex workloads, from artificial intelligence to large-scale simulations.

So, are you ready to learn about the H100 and its impact on high-performance computing? Let’s get started!

Understanding the H100 Architecture: Why Is It So Powerful?

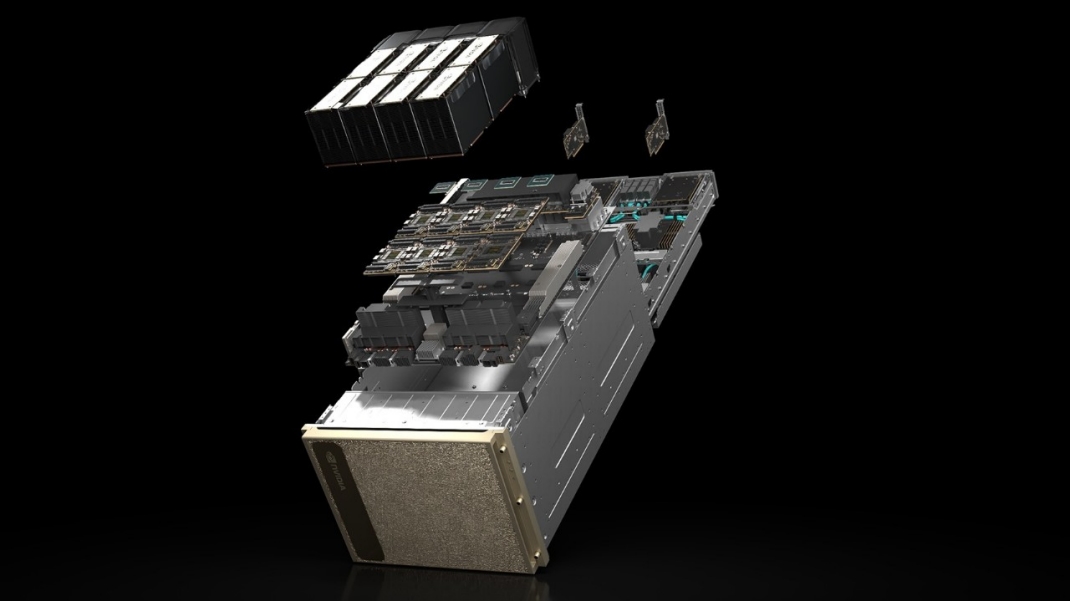

The NVIDIA H100 is built on NVIDIA's Hopper architecture and targets the most demanding AI workloads. Its fourth-generation Tensor Cores and Multi-Instance GPU (MIG) capabilities let it handle workloads like large language models, deep learning, and advanced analytics. It is a power-hungry GPU, but it delivers more performance per watt than its predecessors.

The H100 draws up to 700W per unit. To put this in perspective, a single H100 running flat out around the clock uses more than half as much electricity as an average American household. Building in-house infrastructure for a GPU this power-hungry is therefore a costly undertaking, and often not the wisest one. At TRG, we offer a better, more cost-effective option: with our AI colocation services, you can power your business with the most powerful GPUs for a fraction of what building your own facility would cost.
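Here's how to estimate how a single H100's annual energy use compares with a typical home's. Energy is simply power multiplied by time. This sketch assumes the GPU runs at its full 700W all year and uses an assumed household figure of about 10,500 kWh per year, a round number close to recent U.S. Energy Information Administration averages; swap in your own values.

```python
# Rough annual-energy comparison: one H100 at full power vs. an average U.S. home.
# ASSUMPTION: ~10,500 kWh/year per household, a round figure close to recent
# U.S. EIA residential averages; substitute your own number.
HOURS_PER_YEAR = 24 * 365          # 8,760 hours
H100_MAX_WATTS = 700               # H100 SXM maximum power
HOUSEHOLD_KWH_PER_YEAR = 10_500    # assumed, see note above


def annual_kwh(watts: float, utilization: float = 1.0) -> float:
    """Energy in kWh for a device drawing `watts` at the given average utilization."""
    return watts * utilization * HOURS_PER_YEAR / 1000


gpu_kwh = annual_kwh(H100_MAX_WATTS)
share = gpu_kwh / HOUSEHOLD_KWH_PER_YEAR
print(f"One H100 at 700 W for a year: {gpu_kwh:,.0f} kWh")
print(f"That is about {share:.0%} of an average household's annual use")
```

Lowering `utilization` shows how the figure shrinks for GPUs that don't run at full power all the time.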

That higher power budget is a deliberate design choice: more computation requires more power.

The H100's maximum power (700W for the SXM version) is nearly double the 400W of its predecessor, the A100 SXM. In exchange, it delivers substantially higher throughput, especially in AI applications that process massive amounts of data. Its fourth-generation Tensor Cores are built for advanced machine learning and get more work done per watt than the previous generation.

H100 Power Efficiency in High-Performance Computing

A higher power budget per unit (up to 700W per H100) lets each GPU deliver more computation, which suits demanding applications in deep learning, data analytics, and HPC.

In the GPU vs CPU debate, GPUs like the H100 stand out for high-performance computing because they handle intense, parallel workloads efficiently. As a result, more power per unit can translate into faster data processing and, because jobs finish sooner, lower long-run costs for clients like you.
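Why can a hungrier GPU lower costs? Energy per job is power multiplied by runtime, so a GPU that draws more but finishes much sooner can use less energy overall. The sketch below uses hypothetical numbers: a job assumed to take 10 hours on an A100 at 400W and an assumed 2.5x speedup on an H100 at 700W. It also treats each GPU's maximum power as its average draw, which overstates real consumption.

```python
# Energy for one job = average power x runtime.
# ASSUMPTIONS (hypothetical, for illustration only): the job takes 10 hours
# on an A100 (SXM, 400 W) and the H100 (SXM, 700 W) finishes it 2.5x faster.
# Real speedups vary widely by model, precision, and software stack.

def job_energy_kwh(watts: float, hours: float) -> float:
    """Energy in kWh, assuming the GPU averages `watts` for the whole job."""
    return watts * hours / 1000


A100_WATTS, H100_WATTS = 400, 700
A100_HOURS = 10.0
ASSUMED_SPEEDUP = 2.5              # hypothetical H100-over-A100 speedup

h100_hours = A100_HOURS / ASSUMED_SPEEDUP
a100_kwh = job_energy_kwh(A100_WATTS, A100_HOURS)
h100_kwh = job_energy_kwh(H100_WATTS, h100_hours)

# Break-even: the H100 uses less energy per job whenever speedup > 700 / 400.
break_even_speedup = H100_WATTS / A100_WATTS

print(f"A100: {a100_kwh:.1f} kWh, H100: {h100_kwh:.1f} kWh")
print(f"H100 saves energy once speedup exceeds {break_even_speedup:.2f}x")
```

The break-even line is the useful part: the H100 comes out ahead on energy per job whenever its speedup on your workload exceeds the ratio of the two power figures, 1.75x here.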

The H100 supports Multi-Instance GPU (MIG) technology, which partitions a single GPU into as many as seven isolated instances, each with its own compute and memory resources. This lets one GPU serve multiple applications or clients efficiently while keeping power use in check. Combined with its massive parallel processing, it can tackle the most complex tasks in a remarkably short time.
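To make MIG partitioning concrete, here's a simplified capacity check. It assumes the slice counts NVIDIA documents for the 80 GB H100 (seven compute slices, eight memory slices) and uses a subset of its MIG profile names. It only compares totals and ignores the placement rules real MIG configurations must also satisfy, so treat it as an illustration rather than a configuration tool.

```python
# Simplified model of MIG partitioning on an H100 80GB: the GPU exposes
# 7 compute slices and 8 memory slices, and each profile consumes some of each.
# This checks only the totals; real MIG placement has additional
# position constraints, so treat a True result as "possibly valid".
MIG_PROFILES = {
    # profile: (compute slices, memory slices)
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
COMPUTE_SLICES, MEMORY_SLICES = 7, 8


def fits_on_one_gpu(profiles: list[str]) -> bool:
    """Return True if the requested instances fit within the slice totals."""
    compute = sum(MIG_PROFILES[p][0] for p in profiles)
    memory = sum(MIG_PROFILES[p][1] for p in profiles)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


# Seven small, isolated instances, e.g. one per client or inference service.
print(fits_on_one_gpu(["1g.10gb"] * 7))
# One mid-size and one larger instance.
print(fits_on_one_gpu(["4g.40gb", "3g.40gb"]))
# Too many compute slices requested.
print(fits_on_one_gpu(["4g.40gb", "4g.40gb"]))
```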

H100: Use Cases

Given its massive power draw, you might assume it's the perfect GPU for gaming. It isn't: NVIDIA designed the H100 as a data center accelerator, and it doesn't even have display outputs. If you're planning on playing video games, look elsewhere. Instead, the H100 excels at:

- Artificial Intelligence and Machine Learning: The NVIDIA H100 is great for working with huge amounts of data, thanks to its multi-instance GPU (MIG) abilities and fourth-generation Tensor Cores. These features help it process data faster, making it an excellent choice for AI-related tasks, especially when it comes to big projects like high-performance computing (HPC) and large language models. It’s widely regarded as the best GPU for AI in large-scale applications.

- Deep Learning: Built with Tensor Core GPU technology, the H100 handles deep learning, where models learn patterns from huge amounts of data. It's strong enough to process the massive data sets needed for tasks like training the models behind self-driving cars, where quick decisions are crucial. Its HBM3 memory delivers very high bandwidth (about 3.35 TB/s on the SXM version), which is perfect for data-heavy work.

- Data Analytics: With features like NVLink switch systems and PCIe Gen 5 bandwidth, the H100 makes it easier to work with and analyze massive data sets. It’s useful for businesses that rely on data analysis for growth, as it quickly processes data and offers results, thanks to NVIDIA’s Hopper architecture and DGX systems.

- Simulations and Graphics: While the H100 is best known for AI, it's also capable of compute-heavy simulation and visualization work. Industries that rely on large-scale simulation can benefit from the throughput of this NVIDIA Tensor Core GPU, and support for high-speed cluster networking lets these workloads scale across many GPUs.

- Data Centers and Cloud Applications: The H100 is designed for companies and data centers rather than personal use. It suits cloud applications well because it's available in both PCIe and SXM form factors and supports NVLink for fast GPU-to-GPU data transfer. NVIDIA's enterprise software makes it easier to scale applications. If your organization needs high-performance setups, TRG Data Centers can provide the right environment for powerful machines like the H100.
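To see why memory bandwidth matters for data-heavy AI work, here's a back-of-envelope estimate of how long it takes just to read a model's weights from GPU memory once. It assumes the H100 SXM's peak bandwidth of roughly 3.35 TB/s and a hypothetical 13-billion-parameter model stored in 16-bit precision. For memory-bound tasks, such as generating text one token at a time, this gives a rough lower bound per step; real software reaches only part of peak bandwidth.

```python
# Back-of-envelope: how long does it take just to read a model's weights
# from GPU memory once? For memory-bound work (e.g. generating one token
# at a time), this is a rough lower bound on time per step.
# ASSUMPTIONS: ~3.35 TB/s peak HBM3 bandwidth (H100 SXM spec figure);
# a hypothetical 13-billion-parameter model stored in 16-bit precision.
# Real kernels reach only part of peak bandwidth.

PEAK_BANDWIDTH_BYTES_PER_S = 3.35e12
PARAMS = 13e9
BYTES_PER_PARAM = 2                 # FP16 / BF16


def weight_read_time_ms(params: float, bytes_per_param: int, bandwidth: float) -> float:
    """Milliseconds to stream every weight from memory once at `bandwidth`."""
    return params * bytes_per_param / bandwidth * 1000


model_gb = PARAMS * BYTES_PER_PARAM / 1e9
t_ms = weight_read_time_ms(PARAMS, BYTES_PER_PARAM, PEAK_BANDWIDTH_BYTES_PER_S)
print(f"Model weights: {model_gb:.0f} GB")
print(f"Minimum time to read them once: {t_ms:.1f} ms")
```

Because the estimate scales inversely with bandwidth, a GPU with half the memory bandwidth would need roughly twice as long for the same step.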

Benefits of H100 for High-Performance Computing (HPC)

Although the H100's power consumption is high, its performance per watt makes it worthwhile. Some of the key benefits are:

- It’s Built for Heavy-Duty Jobs

The H100 is ideal for massive workloads like deep learning, data analytics, and other HPC tasks. Sure, it pulls a lot of power, but that energy translates to super-fast data processing. You’ll spend less time waiting and more time getting things done, saving time and reducing costs in the long run.

- Multi-Instance Support (One GPU, Many Jobs)

Imagine dividing a single GPU into smaller “mini-GPUs.” That’s what the H100 can do with multi-instance support. It lets data centers run multiple applications or serve different clients using just one GPU. It’s efficient and pretty impressive too.

- Work Smarter, Not Harder

With the H100, you can run multiple workloads on one GPU at the same time. Data centers don’t need tons of extra hardware; this single powerhouse can handle diverse tasks all at once, making everything smoother and more streamlined.

- Lightning-Fast Data Access

Thanks to its high memory bandwidth, the H100 allows super-fast access to data. For AI and machine learning tasks dealing with massive data sets, this speed can make a huge difference. It’s like having a supercharged data pipeline.

- Cost-Efficient, Even With High Power Draw

Even though it draws a lot of power, the H100 can help keep long-term costs down. Because it gets more work done per watt, it uses less energy per job, which lowers running costs for data centers and makes it easier to scale up without burning through money. For companies, this is where high performance meets real savings.

In short, the H100 may be a power-hungry GPU, but it’s packed with benefits that make it a valuable asset for high-performance computing.

The NVIDIA H100 Role in Accelerating AI Workloads

Alright, the NVIDIA H100 isn’t your average graphics card. It’s a beast. Imagine a machine so powerful it could probably bench press a car (okay, not literally, but you get the idea). This thing is specifically built to handle the heaviest AI and high-performance computing tasks.

Here’s the deal: with NVIDIA’s fourth-gen Tensor Cores inside, the H100 can breeze through complex stuff—like deep learning, natural language processing, and even advanced simulations—in a way that’s hard to believe. It’s packing hundreds of teraflops, which, if you’re into numbers, means it’s insanely fast.

But powering this beast? Not the same as plugging in a toaster. You need a solid power configuration for AI workloads to keep it running efficiently. This is where TRG comes in. Setting up the H100 takes serious cooling, space, and energy infrastructure that isn’t exactly home-office friendly. That’s why hosting it with TRG’s data centers makes sense.

Think about it: with TRG, you get the full setup for power configuration for AI, allowing your H100 to run full speed without worrying about energy spikes or heat. We handle the heavy lifting; you focus on breaking new ground in AI. Sounds good, right?
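Here's a simple sketch of the power math behind rack planning. The 700W per GPU comes from the H100's specifications; the 10.2 kW per 8-GPU server (roughly the published maximum for NVIDIA's DGX H100) and the 40 kW rack budget are assumptions for illustration, not TRG specifications.

```python
# Rack power planning sketch. ASSUMPTIONS (illustrative, not TRG specs):
# - an 8-GPU H100 server is budgeted at 10.2 kW (roughly the published
#   maximum for NVIDIA's DGX H100; check your vendor's figure)
# - a hypothetical rack power budget of 40 kW
GPU_MAX_WATTS = 700


def gpu_only_watts(gpus_per_board: int) -> int:
    """GPU power alone for an HGX-style board; excludes CPUs, NICs, and fans."""
    return gpus_per_board * GPU_MAX_WATTS


def servers_per_rack(rack_budget_kw: float, server_kw: float) -> int:
    """How many servers fit under the rack budget at their maximum draw."""
    return int(rack_budget_kw // server_kw)


print(gpu_only_watts(4), gpu_only_watts(8))   # 4- and 8-GPU boards
n = servers_per_rack(40, 10.2)
print(f"{n} servers, {n * 8} GPUs per 40 kW rack")
```

Notice that GPU power alone (5,600W for an 8-GPU board) is only part of the story: CPUs, networking, and fans push a full server much higher, which is why density planning starts from server-level figures.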

Power Consumption Management for Cost Efficiency

When it comes to high-powered GPUs like NVIDIA’s H100, we’re talking about a power draw of around 700W per unit. That’s no small number. But with TRG’s Dallas data center, you can harness that immense power without overwhelming your resources—or your budget.

There are several ways a data center can manage power consumption effectively. One of the most effective is optimizing workload scheduling: by shifting flexible workloads, such as batch training jobs, to off-peak hours, data centers avoid peak energy rates and cut costs.
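Off-peak scheduling can be framed as a small search problem: given hourly electricity prices, find the cheapest block of consecutive hours for a job that has to run uninterrupted. This minimal sketch uses a sliding window; the price list is made up, and a real scheduler would also weigh deadlines, capacity, and jobs that can be paused and resumed.

```python
# Sketch of off-peak scheduling: given hourly electricity prices, find the
# cheapest contiguous window for a job that must run N hours in one go.
# ASSUMPTION: the 24 hourly prices below are made up for illustration.

def cheapest_window(prices: list[float], hours: int) -> tuple[int, float]:
    """Return (start_hour, total_price) of the cheapest run of `hours` slots.

    Uses a sliding window, so it runs in O(len(prices)) time.
    """
    if not 0 < hours <= len(prices):
        raise ValueError("hours must be between 1 and len(prices)")
    window = sum(prices[:hours])
    best_start, best_total = 0, window
    for start in range(1, len(prices) - hours + 1):
        # Slide right by one hour: add the new hour, drop the oldest one.
        window += prices[start + hours - 1] - prices[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start, best_total


# Hypothetical $/kWh: cheapest late at night, most expensive in the afternoon.
prices = [0.08] * 2 + [0.05] * 4 + [0.10] * 8 + [0.18] * 6 + [0.10] * 4
start, total = cheapest_window(prices, hours=4)
print(f"Start at hour {start}, average price ${total / 4:.3f}/kWh")
```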

In addition, the data center needs to invest in advanced cooling. As H100 clusters get denser, air cooling alone struggles to remove the heat they produce, so liquid cooling becomes the better choice. Liquid carries heat away far more effectively than air, and because it reduces the need for extensive air conditioning, it can lower the facility's overall energy use.
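Cooling efficiency is often measured with power usage effectiveness (PUE): total facility energy divided by the energy used by IT equipment. A PUE of 1.0 would mean zero overhead, so any reduction in cooling load shows up directly in total energy. The sketch below compares two hypothetical PUE values (1.5 and 1.2) for an assumed constant 100 kW IT load; the numbers are illustrative, not measurements of any specific facility.

```python
# Facility energy = IT energy x PUE (power usage effectiveness).
# Cooling and other overhead are what push PUE above 1.0, so lowering the
# cooling load lowers total energy for the same GPU work.
# ASSUMPTIONS: the PUE values and the 100 kW IT load are hypothetical.

HOURS_PER_YEAR = 8760


def facility_kwh(it_kw: float, pue: float, hours: float = HOURS_PER_YEAR) -> float:
    """Total facility energy in kWh for a constant IT load of `it_kw` kilowatts."""
    return it_kw * pue * hours


it_load_kw = 100.0                           # assumed constant IT load
air = facility_kwh(it_load_kw, pue=1.5)      # hypothetical air-cooled hall
liquid = facility_kwh(it_load_kw, pue=1.2)   # hypothetical liquid-cooled hall
print(f"Annual overhead saved: {air - liquid:,.0f} kWh")
```

The savings equal the IT load times the PUE difference times the hours, so they grow in direct proportion to cluster size.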

Key Takeaways

The NVIDIA H100 is a powerhouse GPU, built to tackle heavy-duty AI and high-performance computing (HPC) tasks like deep learning, data analytics, and complex simulations. With a power consumption reaching up to 700W, it’s not for the average setup—this GPU needs robust infrastructure, especially for cooling and energy management. But with benefits like high memory bandwidth, multi-instance capabilities, and super-efficient parallel processing, the H100 transforms how data centers handle AI workloads.

At TRG, we offer the perfect solution for hosting these energy-intensive GPUs, with advanced cooling, optimized power distribution, and high-density configurations tailored for the H100’s demands. From lightning-fast data access to cost-effective power management, TRG’s data centers make high-powered computing achievable without breaking the bank.

Ready to unlock the full potential of AI with NVIDIA’s H100? Get in touch to explore how TRG can power your vision efficiently and effectively.

How TRG Can Help You With Your GPU Usage Needs

Managing GPUs like the NVIDIA H100 is no small task. These GPUs demand smart solutions for cooling, power management, and efficient use of space—all areas TRG is equipped to handle smoothly.

Cooling is crucial when it comes to high-density clusters. Traditional air cooling alone can’t keep up, so we’ve implemented advanced options like liquid and immersion cooling to keep things running cool and steady, even with the intense output of GPUs like the H100.

Power management also needs a careful approach. TRG plans power distribution across high-density clusters so that energy is used efficiently and costs stay manageable, while interconnects like NVIDIA's NVLink keep data moving quickly between GPUs.

And for space? We’ve developed configurations that maximize airflow and cooling, allowing multiple GPUs to run in compact setups without sacrificing performance.

In essence, TRG provides the infrastructure to help you make the most of powerful GPUs, supporting your AI and high-performance computing needs with reliability and efficiency. Looking to truly fulfill your GPU usage needs? Learn how you can use our Houston data center today!

Frequently Asked Questions

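How much power can the H100 chip consume?

The SXM version of the NVIDIA H100 can draw up to 700W, while the PCIe card is rated at 300 to 350W. Actual consumption depends on workload intensity and system configuration, but the SXM part's ceiling is well above its predecessor's: the A100 SXM tops out at 400W.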

What is the H100's maximum power?

The H100 SXM has a maximum power of up to 700W (configurable), which gives it the headroom to sustain heavy workloads in data centers and HPC setups.

How many watts is the HGX H100?

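HGX H100 boards come with four or eight H100 SXM GPUs. At up to 700W per GPU, the GPUs alone can draw up to 2,800W or 5,600W respectively, and a complete server adds CPUs, memory, networking, and fans on top of that, depending on configuration and workload demand.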

What’s the TDP of H100?

The Thermal Design Power (TDP) of the H100 SXM is up to 700W, which drives cooling requirements and overall data center energy consumption.
