The NVIDIA H100 is a cutting-edge GPU based on the NVIDIA Hopper architecture. With 80 billion transistors and high-bandwidth HBM3 memory, the H100 is designed for large-scale, high-performance workloads.

However, with H200 released and Blackwell on the way, is NVIDIA H100 a wise investment choice? Is purchasing worth it, given H100’s availability in the cloud?

This article explores H100 pricing, buying options, and why you should consider investing in this hardware.

Understanding NVIDIA H100 price

The NVIDIA H100 is available in two main configurations.

H100 SXM5 GPU

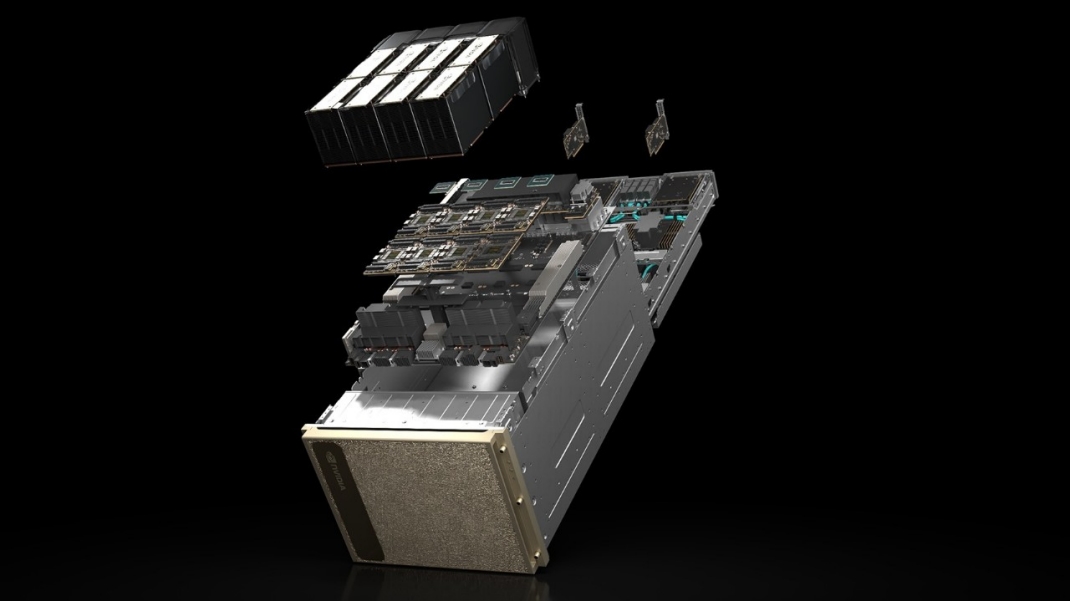

The H100 SXM5 configuration uses NVIDIA’s custom-built SXM5 board with H100 GPU, fourth-generation NVLink, HBM3 memory stacks, and PCIe Gen 5 connectivity. It is available through HGX H100 server boards with 4-GPU and 8-GPU configurations. Each GPU has 80 GB memory and 3.35TB/s memory bandwidth.

Pricing starts at US$27,000 for a single GPU and can increase to US$108,000 for four and US$216,000 for an eight-GPU board.

H100 NVL GPU

The H100 NVL GPU offers the same Hopper features as the SXM version in a PCIe form factor, with more memory per GPU. Each GPU has 94 GB memory and 3.9TB/s memory bandwidth. The H100 NVL design has two PCIe cards connected through three NVLink Gen4 bridges, so you can think of it as double GPU power working as a single unit. Each card draws 350-400 watts, compared with 700 watts for an SXM GPU.

The price for a single GPU board starts at US$29,000. You can choose from 1-8 GPUs in your custom server board, with the price rising to about US$235,000 for eight GPUs.

A note about pricing

It is important to note that price varies by vendor. A single GPU can cost anywhere from $27,000 to $40,000 depending on vendor discounts on offer and customizations you choose. So, it’s really important to shop around for the best deals. Contact at least five vendors and get custom quotes for your requirements. You’ll be surprised at how much you can save. As H200 and Blackwell availability increases, the prices may reduce even more.

Why is the NVIDIA H100 so expensive?

The NVIDIA H100 is priced at $10,000-$15,000 more than the previous A100 model. It is a next-generation GPU architecture with several improvements over the previous Ampere design. In the H100 vs. A100 debate, H100 wins hands-down in performance, capabilities, and sustainability. It introduces the following new technologies.

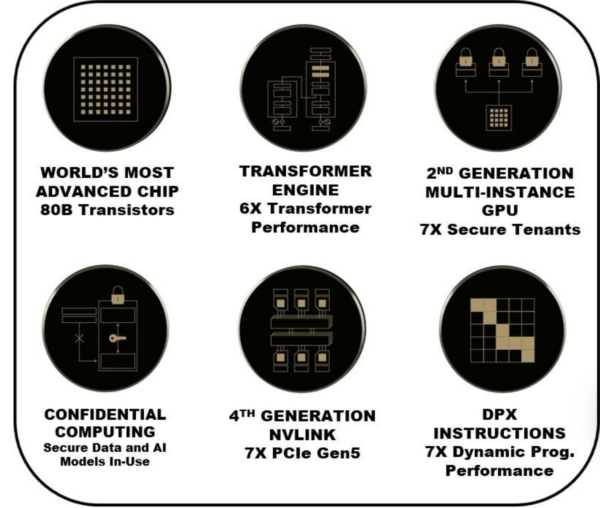

Transformer engine

The NVIDIA H100 introduces a new transformer engine to improve transformer model performance in AI. This engine combines software optimizations and a custom Tensor Core architecture design for model training and inference. It dynamically manages precision levels and handles recasting and scaling across model layers. NVIDIA cites up to 9x faster training and up to 30x faster inference than the A100 for large language models, which makes the H100 a leading GPU for AI.
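The scaling and recasting idea can be illustrated with a minimal sketch, assuming a simple per-tensor scheme that scales values by their largest absolute value before casting to a narrower format. IEEE half precision (available through Python's `struct` module) is used here only as a stand-in for a reduced-precision format; the function names and the `margin` parameter are hypothetical, and this is not a description of how NVIDIA's engine is implemented.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_fp16(x):
    """Round-trip a Python float through IEEE half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def scaled_cast(values, margin=2.0):
    """Scale values so the largest magnitude fits the narrow format, then cast.

    `margin` (hypothetical) leaves headroom below the format's maximum.
    """
    amax = max(abs(v) for v in values)
    scale = FP16_MAX / (amax * margin) if amax > 0 else 1.0
    casted = [to_fp16(v * scale) for v in values]
    return casted, scale


def unscale(casted, scale):
    """Undo the scaling after the low-precision step."""
    return [c / scale for c in casted]


# These values are too large for half precision as-is:
# calling to_fp16(1.0e6) directly raises OverflowError.
activations = [1.0e6, -2.5e5, 3.0]
casted, scale = scaled_cast(activations)
restored = unscale(casted, scale)
print(scale, restored)
```

Choosing the scale from the observed maximum keeps large values from overflowing while preserving the relative precision of small ones, which is the general reason for managing scaling layer by layer.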

Multi-Instance GPU

The H100’s second-generation MIG technology offers flexibility and performance for multi-tenant environments. Compared to its predecessor, it provides three times the compute capacity and two times the memory bandwidth per isolated GPU instance.

Memory improvements

The H100 features an HBM3 memory subsystem offering roughly 3 TB/sec of memory bandwidth, nearly double that of the A100. Additionally, the 50 MB L2 cache ensures efficient data access by caching substantial portions of models and datasets, boosting overall performance.

Dynamic programming capabilities

With its new DPX Instructions, the H100 significantly accelerates dynamic programming (DP) algorithms, such as the Smith-Waterman algorithm for genomics and the Floyd-Warshall algorithm for optimal routing in robotics. It can achieve a performance boost of up to 7x over the A100.
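To show the kind of computation involved, here is a minimal pure-Python sketch of the Floyd-Warshall algorithm. It runs on the CPU and does not use DPX instructions; it only illustrates the add-then-minimum update at the heart of this class of dynamic programming algorithms. The example graph and its weights are made up for illustration.

```python
import math

INF = math.inf


def floyd_warshall(weights):
    """Return all-pairs shortest path distances for a weighted adjacency matrix."""
    n = len(weights)
    dist = [row[:] for row in weights]  # copy so the input is not modified
    for k in range(n):          # allow vertex k as an intermediate stop
        for i in range(n):
            for j in range(n):
                # Core DP update: an addition followed by a minimum.
                candidate = dist[i][k] + dist[k][j]
                if candidate < dist[i][j]:
                    dist[i][j] = candidate
    return dist


# Hypothetical 4-node routing graph; INF means no direct edge.
graph = [
    [0, 3, INF, 7],
    [8, 0, 2, INF],
    [5, INF, 0, 1],
    [2, INF, INF, 0],
]
shortest = floyd_warshall(graph)
for row in shortest:
    print(row)
```

The triple loop performs the same small update n³ times, which is why hardware support for that update can pay off on large problems.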

Improved processing

The H100 introduces thread block clusters to optimize workload distribution and enable faster, more efficient computation. Asynchronous execution features speed up data transfers between global and shared memory, improving throughput and flexibility.

NVIDIA H100 price in the cloud

You can run your workloads on various cloud platforms and pay an hourly price for GPU usage. Depending on your chosen platform, the price range is $2 to $10 per GPU/hour.

However, it is important to note that these figures are highly misleading. If anything seems too good to be true, it usually is. You will not get a $120,000 server board for $10!

Let’s understand real daily/monthly/annual costs with this model. Firstly, discounted rates are only for the first 500-1000 hours. After that, the price usually goes up to $8-10/hour. Hence, our price analysis assumes the average cloud cost is $5/GPU hour. Let’s assume your workloads run on a 4-GPU server board, and usage is about eight hours/day. However, pricing is not just about how much your end users access the AI models. It’s also important to factor in the following additional time.

- Cold start time: the time it takes for a new application instance to become ready to handle requests, including the time for GPU allocation and initialization, library and driver loading, and CUDA environment setup.

- Model loading time: the time it takes to load the large language models, code, and related dependencies into GPU memory.

- Inference time: the time your model takes to process user input and determine the best output in production.

- Input/output operations: the time spent downloading large datasets or files or writing extensive outputs, which adds to the overall runtime.

Once this overhead is added to the eight hours of usage, let’s assume your GPUs are billed for 16 hours daily. This is a conservative estimate, as many AI/HPC workloads run 24/7.

| Advertised price per GPU hour | Price per hour for 4 GPUs | Usage per day | Price per day | Price per month (25 business days) | Time until rental cost exceeds initial purchase cost ($120,000) |
| --- | --- | --- | --- | --- | --- |
| $5 | $20 | 16 hours | $320 | $8,000 | 15 months |
| $5 | $20 | 24 hours | $480 | $12,000 | 10 months |

Even with our conservative estimates, the cumulative rental cost exceeds the price of a 4-GPU board in 15 months, just over a year. For 24/7 workloads, it exceeds the initial purchase cost in just 10 months, even with downtime worked in! Read our guide, Buying NVIDIA H100 GPUs beat AWS rental costs, to learn more.
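The arithmetic behind these figures can be reproduced with a short sketch, assuming the same inputs used in this article: $5 per GPU hour, 4 GPUs, 25 billed days per month, and a $120,000 purchase price. The function and parameter names are illustrative, so swap in your own quotes and usage pattern.

```python
import math


def months_to_break_even(purchase_cost, price_per_gpu_hour, gpus,
                         hours_per_day, days_per_month=25):
    """Return (daily cost, monthly cost, months until rental >= purchase)."""
    hourly = price_per_gpu_hour * gpus
    daily = hourly * hours_per_day
    monthly = daily * days_per_month
    # Round up: the purchase cost is reached during that month.
    months = math.ceil(purchase_cost / monthly)
    return daily, monthly, months


for hours in (16, 24):
    daily, monthly, months = months_to_break_even(120_000, 5, 4, hours)
    print(f"{hours} h/day: ${daily:,.0f}/day, ${monthly:,.0f}/month, "
          f"break-even after {months} months")
```

This deliberately ignores power, colocation, and staffing costs on the ownership side, as does the comparison above, so treat the result as a rough first estimate.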

Maintaining H100 infrastructure

Purchasing GPUs upfront is much more cost-effective than paying forever in the cloud.

Setting up on-prem infrastructure is no longer stressful, thanks to our Houston data center and Colo+ service. We provide GPU colocation services, so you only purchase the GPUs, and we handle the rest. Your team doesn’t even have to visit us. Ship us your hardware, and we’ll manage everything from installation to rack setup and all future changes.

We provide a range of cooling and power options for all budgets. Our facilities are highly secure and well-protected from all types of disasters, including extreme weather like hurricanes and flooding.

Conclusion

NVIDIA H100 is a standout GPU worth investing in for running massive AI and HPC workloads. Anyone running continuous AI and HPC workloads should consider purchasing the H100. Organizations conducting scientific and medical research, building new AI applications, or adding AI/ML capabilities to existing applications can benefit from the H100. It offers faster performance and lower electricity bills than the A100.

If you are just starting new projects, H100 servers should serve you for many years. The H200 offers similar capabilities with more memory, and Blackwell is still some way from mass adoption. The NVIDIA H100 remains a solid choice for organizations looking to run continuous AI/HPC workloads at scale.

FAQs

Is NVIDIA H100 available?

Yes, the NVIDIA H100 is available for purchase through NVIDIA’s authorized distributors and select retailers. It is offered in various configurations, such as PCIe and SXM, catering to different deployment needs in AI and HPC environments. Availability may vary depending on the region and supply chain conditions.

How much does one H100 cost?

The NVIDIA H100 GPU typically costs between $27,000 and $40,000 per unit, depending on the configuration (e.g., PCIe or SXM) and vendor pricing. Bulk purchases or enterprise agreements may offer some discounts, but the H100 remains a premium product targeted at high-performance computing applications.

How much is an H100 server in dollars?

The cost of an H100-powered server can range from $250,000 to $400,000, depending on the configuration, number of GPUs, and additional components like CPUs, memory, and storage. These servers are designed for advanced AI workloads and large-scale HPC environments and offer exceptional performance for their cost.
