The NVIDIA H200 is the latest NVIDIA GPU built on the Hopper architecture. It is a memory-upgraded version of the H100 and offers significantly higher performance with lower energy use per workload and lower running costs.

Despite offering up to 50% better performance, a single NVIDIA H200 NVL card is only about 10% more expensive than its H100 counterpart, although multi-GPU SXM boards carry a larger premium. So how much does it cost, and is it worth the investment?

This article explores NVIDIA H200 pricing and the scenarios in which purchasing an H200 makes the most sense.

What makes the NVIDIA H200 stand out?

The NVIDIA H200 sets a new benchmark in Hopper architecture-based GPU performance. It can meet both performance and sustainability demands. Key features include:

Larger, faster memory

The NVIDIA H200 offers 141 GB of GPU memory, nearly double the 80 GB of the H100 SXM (the H100 NVL has 94 GB). GPU memory bandwidth has also increased by about 1.4x, from 3.35 TB/s to 4.8 TB/s. The larger, faster memory speeds up data access for data-heavy workloads, so processing is less likely to stall on memory bottlenecks.

High-performing LLM inference

The H200 is designed to meet the demands of modern large language models. It offers roughly 1.5x to 2x faster inference than the H100 for models ranging from 13B to 175B+ parameters. That combination of speed and memory makes it a strong fit for organizations focused on AI model development.
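To make the link between model size and GPU memory concrete, here is a minimal sketch that estimates how much memory a model's weights need. It assumes 16-bit weights (2 bytes per parameter) and counts weights only, ignoring the KV cache, activations, and framework overhead, so real deployments need more headroom. The 70B size is an illustrative midpoint, not a figure from this article, and the function names are hypothetical.

```python
import math

H200_MEMORY_GB = 141  # per-GPU memory quoted in this article


def weights_gb(params_billion, bytes_per_param=2):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes), weights only.

    Assumption: 2 bytes per parameter (FP16/BF16).
    """
    return params_billion * bytes_per_param


def min_gpus(params_billion, gpu_memory_gb=H200_MEMORY_GB, bytes_per_param=2):
    """Lower bound on GPUs needed just to hold the weights."""
    return math.ceil(weights_gb(params_billion, bytes_per_param) / gpu_memory_gb)


# 13B and 175B come from the article; 70B is an illustrative midpoint.
for size in (13, 70, 175):
    print(f"{size}B parameters: ~{weights_gb(size)} GB of weights, "
          f"at least {min_gpus(size)} H200 GPU(s)")
```

Under these assumptions, a 70B model's weights (about 140 GB) only just fit in a single 141 GB H200, leaving almost no room for anything else, while a 175B model needs at least three GPUs.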

Supercharged high-performance computing

The H200’s high memory bandwidth enables faster data transfer and reduces processing bottlenecks in HPC applications like scientific research, simulations, and genomics. According to NVIDIA, efficient data access and manipulation gives up to 110x faster time to results compared with CPUs.

Reduced energy and TCO

The H200 has a configurable power profile of 600-700W, similar to the H100. Because it draws similar power but finishes work faster, a fixed workload consumes less energy: a 50% performance boost cuts energy per job by roughly a third, and workloads that run closer to 2x faster approach a 50% reduction. This lowers operational costs and total cost of ownership (TCO). It is a sustainable choice for data centers aiming to minimize environmental impact and energy expenses.
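As a back-of-the-envelope check on how a speedup translates into energy savings, the sketch below treats energy for a fixed job as power draw multiplied by run time. It assumes both GPUs draw the same 700W for the whole job; the 10-hour baseline is a made-up example, and the function names are hypothetical.

```python
def energy_kwh(power_watts, hours):
    """Energy used by a job, in kilowatt-hours."""
    return power_watts * hours / 1000.0


def energy_saving_fraction(speedup):
    """Fraction of energy saved on a fixed job that runs `speedup` times
    faster at the same power draw."""
    return 1.0 - 1.0 / speedup


POWER_WATTS = 700.0     # upper end of the power profile quoted above
BASELINE_HOURS = 10.0   # hypothetical job length on the slower GPU

for speedup in (1.5, 2.0):
    before = energy_kwh(POWER_WATTS, BASELINE_HOURS)
    after = energy_kwh(POWER_WATTS, BASELINE_HOURS / speedup)
    print(f"{speedup}x faster: {before:.2f} kWh -> {after:.2f} kWh "
          f"({energy_saving_fraction(speedup):.0%} less energy)")
```

Under this simple model, a 1.5x speedup saves about a third of the energy per job, and a full 50% saving requires roughly a 2x speedup.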

Understanding NVIDIA H200 pricing

The NVIDIA H200 is available in two configurations: SXM and NVL.

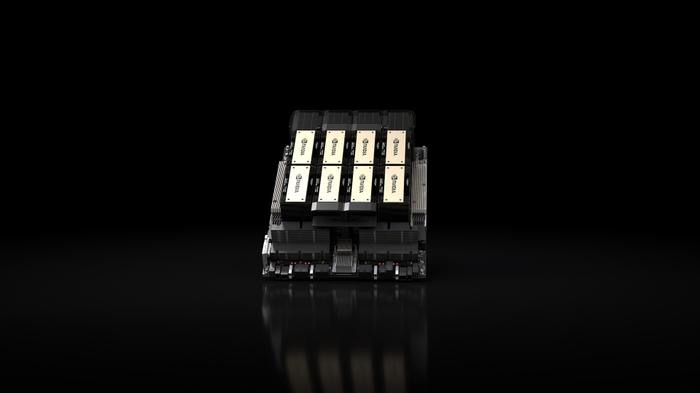

NVIDIA H200 SXM

The NVIDIA H200 SXM GPU comes on custom-built NVIDIA SXM boards in 4-GPU or 8-GPU combinations. NVLink interconnects the GPUs at 900 GB/s so that they work together as a single high-performance system. The power profile of the SXM is slightly higher at 700W, but the workload optimization it provides more than makes up for it.

A 4-GPU SXM board costs about $170,000 to $175,000, and an 8-GPU board costs anywhere from $308,000 to $315,000, depending on the manufacturer. A single SXM GPU chip is not available for purchase at the time of writing.

NVIDIA H200 NVL

The NVIDIA H200 NVL GPU offers the same memory and bandwidth as the SXM but a slightly lower power profile of 600W. You also get a choice of 2-way or 4-way NVLink. A 2-way NVLink bridge connects two GPUs, while a 4-way NVLink bridge interconnects four GPUs, offering more parallel processing capacity for building multi-GPU systems.

A single NVL GPU card with 141 GB of memory costs between $31,000 and $32,000. NVL H200 server boards must be custom-built by NVIDIA partners, and you can specify the number of GPUs and NVLink connections. The price starts at $100,000 and can go up to $350,000, depending on the configuration you choose.

NVIDIA H200 pricing in the cloud

You can also run your workloads on H200 cloud platforms. Advertised prices range from $2 to $10 per GPU per hour. However, the hourly rate can be misleading, because sustained usage adds up quickly.

Let’s consider some actual costs for a 4-GPU configuration running 24 hours a day, six days a week (with downtime on Sundays), 52 weeks a year.

| Cost per GPU/hr | Cost per hour for 4 GPUs | Price per day | Price per week (6 days) | Price per year |
| --- | --- | --- | --- | --- |
| $3 | $12 | $288 | $1,728 | $89,856 |
| $5 | $20 | $480 | $2,880 | $149,760 |

A 4-GPU SXM board costs roughly $170,000 to $175,000. At $5/GPU/hr, cumulative cloud costs exceed that purchase price in just over a year; at $3/GPU/hr, they do so in a little under two years.
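The comparison can be reproduced with a short script. This is a minimal sketch under the same assumptions as the figures in this section (4 GPUs, 24 hours a day, 6 days a week, 52 weeks a year, and a $170,000 board price); it deliberately ignores power, hosting, and maintenance costs on the purchase side, and the function names are hypothetical.

```python
HOURS_PER_DAY = 24
DAYS_PER_WEEK = 6      # downtime on Sundays
WEEKS_PER_YEAR = 52


def annual_cloud_cost(rate_per_gpu_hour, gpus=4):
    """Yearly cloud bill in dollars for the usage pattern above."""
    return rate_per_gpu_hour * gpus * HOURS_PER_DAY * DAYS_PER_WEEK * WEEKS_PER_YEAR


def break_even_years(purchase_price, rate_per_gpu_hour, gpus=4):
    """Years of cloud rental that add up to the purchase price."""
    return purchase_price / annual_cloud_cost(rate_per_gpu_hour, gpus)


BOARD_PRICE = 170_000  # 4-GPU H200 SXM board price used in this article

for rate in (3, 5):
    yearly = annual_cloud_cost(rate)
    years = break_even_years(BOARD_PRICE, rate)
    print(f"${rate}/GPU/hr: ${yearly:,} per year, "
          f"break-even after about {years:.2f} years")
```

Swapping in your own hourly rate, GPU count, and usage pattern gives a quick first estimate before a fuller cost analysis.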

Investing in hardware is usually the most cost-effective option for organizations whose AI/HPC workloads run continuously and are expected to scale over time. We encourage you to perform your own cost calculations before making a decision. Read our guide, "Buying NVIDIA H100 GPUs beat AWS rental costs", to learn more.

Maintaining H200 infrastructure

Investing in GPUs outright often proves far more economical than relying on perpetual cloud services. Building and maintaining an on-premises infrastructure is no longer necessary, thanks to our Houston data center. Simply ship your GPUs to us, and our team will handle the complete setup—from installation and racking to ongoing upgrades and adjustments. Our AI colocation services allow you to own and control your specialized AI hardware while offloading infrastructure management to experts.

Our Houston data center is equipped to meet diverse budgets with various cooling and power solutions that optimize GPU performance while minimizing costs. Security is our top priority—our facilities are safeguarded against physical and digital threats, including natural disasters like hurricanes and flooding. This combination of flexibility, reliability, and protection ensures your GPUs are in the best environment for uninterrupted operation.

What is the difference between NVIDIA H100 and H200 pricing?

The NVIDIA H200 costs more than the H100: roughly 10% more for a single NVL card and around 50% more for SXM boards. See the pricing comparison below.

| GPU type | H100 cost | H200 cost | Price difference |
| --- | --- | --- | --- |
| SXM 4-GPU | $110,000 | $170,000 | about 55% |
| SXM 8-GPU | $215,000 | $315,000 | about 47% |
| NVL single GPU | $29,000 | $32,000 | about 10% |

The price difference is mainly due to the increased memory capacity and better NVLink connections. Remember to factor in ongoing operational costs, as the savings on electricity bills can be significant.
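The percentage premium for each configuration can be checked directly from the listed prices. The sketch below uses only the H100 and H200 prices from the comparison in this section; the function name is hypothetical.

```python
def premium_percent(h100_price, h200_price):
    """How much more the H200 costs than the H100, as a percentage."""
    return (h200_price - h100_price) / h100_price * 100


# (H100 price, H200 price) in dollars, taken from the comparison above
prices = {
    "SXM 4-GPU": (110_000, 170_000),
    "SXM 8-GPU": (215_000, 315_000),
    "NVL single GPU": (29_000, 32_000),
}

for name, (h100, h200) in prices.items():
    print(f"{name}: H200 costs {premium_percent(h100, h200):.1f}% more")
```

The SXM boards come out at roughly 55% and 47% more, and the single NVL card at about 10% more.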

Switching from H100 to H200

If you run your workloads on H100 SXM, the power savings from moving to the H200 can be significant. Moving from H100 NVL to H200, however, may not make much practical difference. In that case, the main reason to switch is that you need more performance and your workloads have outgrown the capacity of your existing H100 infrastructure.

Who should consider purchasing the NVIDIA H200?

If you are running your workloads on the older NVIDIA A100 (Ampere architecture), it is time to make the switch. There is a massive jump in features between the A100 and the H100. Add the extra memory capacity of the H200, and you will see a significant boost in performance and speed.

If you are investing in AI infrastructure for the first time, the NVIDIA H200 is still the best choice. It is a sound infrastructure investment that should serve you for years. At 141 GB per GPU, it offers the most memory of the H100 and H200 cards discussed here, although NVIDIA's next-generation Blackwell GPUs are specified with more.

However, consider holding out if you already have H100s and are not at capacity. Instead of buying the H200, you should wait for the next-generation NVIDIA Blackwell, which promises more features for improved performance and enhanced AI capabilities.

Conclusion

The NVIDIA H200 remains the best AI infrastructure on the market today. It pairs the largest memory capacity in the H100/H200 line with a strong performance-to-cost ratio. For workloads that run continuously, a one-time purchase can pay for itself against cloud rental in roughly one to two years, and ongoing operational costs can be significantly reduced using TRG GPU colocation services.

FAQs

How much does the H200 chip cost?

A single NVIDIA H200 NVL card is estimated to cost around $32,000, depending on configuration and bulk purchasing terms. This price reflects its higher performance and increased memory capacity, and the modest premium makes it a cost-effective option compared with its predecessor, the H100.

What is the NVIDIA H200 used for?

The NVIDIA H200 is designed for demanding generative AI, large language model inference, and high-performance computing workloads. Its advanced HBM3e memory and improved bandwidth make it ideal for applications like scientific simulations, GPT model inference, and other data-intensive tasks.

How much does the NVIDIA AI rack cost?

The cost of an NVIDIA AI rack with multiple 8-GPU H200 boards can exceed $600,000, depending on the configuration and any included networking and storage solutions. Pricing varies with custom setups and additional enterprise services.
